RAG: Technology that combines the power of LLMs with real-time knowledge sources

What is RAG?

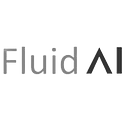

RAG (Retrieval-augmented generation) is a technique for enhancing the accuracy and reliability of large language models (LLMs). It does this by allowing the LLM to “consult” an external knowledge base for relevant information before generating a response. It uses a retriever to select relevant documents from a large corpus and a generator to produce text based on the retrieved documents and the input query.

Think of it as giving the LLM a personal library to check facts and details before speaking. This helps LLMs generate more accurate and reliable outputs by grounding them in factual information.

How does it work?

- Gathering data: RAG’s knowledge base feasts on a diverse buffet of information. This can include text data like news articles, research papers, and customer feedback, as well as structured data like databases and knowledge graphs.

- Input: You ask a question or give a prompt to the RAG-based system.

- Information retrieval: When the system receives a query, its retriever searches the knowledge base and pulls out the most relevant information from that repository, taking keywords, context, and user intent into account.

- Fact checking and verification: To improve accuracy, some systems cross-reference the retrieved information against other sources such as factual databases and trusted websites. This helps reduce misinformation and bias.

- Prompt generation: The system builds a prompt from the retrieved information and the original query. This prompt gives the LLM (large language model) context and direction for generating its response.

- Augmentation: The retrieved information is combined with the LLM’s internal knowledge and understanding of language.

- Generation: The LLM uses this combined knowledge to generate a response that is more accurate, factual, and relevant to your input.
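The steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production design: the knowledge base is a hypothetical in-memory list, retrieval is plain word overlap rather than embeddings, and `generate` is a placeholder standing in for a real LLM call.

```python
import re

# Hypothetical in-memory knowledge base; a real system would use a document store or vector index.
KNOWLEDGE_BASE = [
    {"id": "doc1", "text": "RAG retrieves documents from a knowledge base before generating an answer."},
    {"id": "doc2", "text": "Fine-tuning adjusts the weights of a pre-trained model on new data."},
    {"id": "doc3", "text": "A retriever ranks documents by their relevance to the query."},
]

def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())

def retrieve(query, docs, k=2):
    """Retrieval step: rank documents by shared query words and return the top k."""
    query_tokens = set(tokenize(query))
    scored = []
    for doc in docs:
        overlap = len(query_tokens & set(tokenize(doc["text"])))
        if overlap > 0:
            scored.append((overlap, doc))
    # Sort on the score only; ties keep their original order.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:k]]

def build_prompt(query, retrieved):
    """Augmentation step: place the retrieved passages in front of the question."""
    context = "\n".join(f"[{doc['id']}] {doc['text']}" for doc in retrieved)
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}\n"
        "Answer:"
    )

def generate(prompt):
    """Generation step: placeholder for the LLM call (assumption, swap in a real client)."""
    return f"(model output for a prompt of {len(prompt)} characters)"

def answer(query):
    """Run retrieval, augmentation, and generation in order."""
    retrieved = retrieve(query, KNOWLEDGE_BASE)
    prompt = build_prompt(query, retrieved)
    return generate(prompt), retrieved

if __name__ == "__main__":
    response, sources = answer("How does a retriever rank documents?")
    print(response)
    print([doc["id"] for doc in sources])
```

Word overlap is used here only because it keeps the sketch self-contained; swapping `retrieve` for an embedding-based search would leave the rest of the flow unchanged.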

Examples of RAG applications:

- Question answering systems: Generate more accurate and informative answers to your questions.

- Summarization: Produce concise summaries of large documents and reports.

- Creative writing assistants: Help the LLM generate more realistic, fact-based content while still applying its creativity across different content pieces.

- Code generation: Generate more reliable and efficient code based on specific requirements.

Generative Pre-trained Transformer (GPT) and Retrieval-Augmented Generation (RAG) are both powerful tools in the realm of Natural Language Processing (NLP).

Let's have a look at the breakdown of their key differences:

Focus:

GPT focuses on internal language modeling. It learns the statistical relationships between words and phrases within a massive dataset of text and code. This allows it to generate creative text formats like poems, code, scripts, musical pieces, and more.

RAG focuses on external knowledge integration. It leverages a pre-trained language model (like a GPT) but also consults external knowledge sources like documents or databases. This allows it to provide more factual and grounded responses based on real-world information.

Architecture:

GPT typically has a single-stage architecture. The input prompt is fed directly to the model, and it generates an output based on its internal knowledge of language patterns.

RAG has a two-stage architecture. In the first stage, it retrieves relevant information from the external knowledge source based on the input prompt. In the second stage, it uses this retrieved information along with the original prompt to generate a response.
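The architectural difference can be made concrete with a short sketch. The function names, the echoing stand-in model, and the fixed retriever below are all hypothetical and exist only to show where the extra stage sits.

```python
from typing import Callable, List

def single_stage(prompt: str, llm: Callable[[str], str]) -> str:
    """GPT-style: the prompt goes straight to the model."""
    return llm(prompt)

def two_stage(
    prompt: str,
    retriever: Callable[[str], List[str]],
    llm: Callable[[str], str],
) -> str:
    """RAG-style: stage 1 retrieves passages, stage 2 generates from prompt plus passages."""
    passages = retriever(prompt)  # stage 1: retrieval
    context = "\n".join(passages)
    augmented = f"Context:\n{context}\n\nQuestion: {prompt}"
    return llm(augmented)  # stage 2: generation

# Stand-ins for illustration only: an "LLM" that echoes its input and a fixed retriever.
def echo_llm(text: str) -> str:
    return text

def fixed_retriever(query: str) -> List[str]:
    return ["The office closes at 6 pm on weekdays."]

if __name__ == "__main__":
    question = "When does the office close?"
    print(single_stage(question, echo_llm))
    print(two_stage(question, fixed_retriever, echo_llm))
```

Because the stand-in model simply echoes what it receives, running the sketch shows exactly what each architecture hands to the model: the bare question in one case, the question plus retrieved context in the other.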

Strengths:

GPT excels at creative tasks like generating different text formats, translating languages, and writing different kinds of creative content. It can also be used for question answering, but its accuracy might be limited to the information contained in its training data.

RAG excels at providing factually accurate and contextually relevant responses while still handling creative tasks. It’s particularly useful for tasks like question answering, where reliable information retrieval is crucial.

Weaknesses:

GPT can be prone to generating factually incorrect or misleading information, especially when dealing with open-ended prompts or topics outside its training data.

RAG is only as good as what it retrieves: the quality of its responses depends heavily on the quality and relevance of the external knowledge source.

In essence:

Imagine GPT as a talented storyteller who only relies on their imagination and memory. They can weave captivating tales, but their stories may not always be accurate or true to reality.

RAG is like a storyteller with access to a vast library of books. They can still use their imagination, but they can also draw information and facts from the books, making their stories more grounded and reliable.

Approaches to LLM Maintenance and Customization

Traditional approaches to working with LLMs rely primarily on large, pre-trained models and publicly available datasets, with limited options for fine-tuning and customization.

Moreover, maintaining these models requires periodic manual updates and retraining as developers release new pre-trained models. Keeping a model current this way is costly, resource-intensive, and time-consuming.

Alternatively, a newer approach is to leverage LLMs that can learn and adapt continuously from new data and experiences. This removes the need to frequently retrain the entire model and keeps it more relevant and up to date.

Continuous learning and adaptation techniques, such as active learning, transfer learning, meta-learning, and real-time data integration, allow the LLM to continuously adjust its internal parameters based on new information. This improves the model’s performance over time.

The knowledge base is constantly evolving. As new data is added and user interactions occur, the RAG-based system updates its understanding and refines its responses, so that relevance and accuracy stay at the forefront.
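A small sketch shows why adding data to a knowledge base is cheaper than retraining. The `KnowledgeBase` class and the pricing documents are hypothetical, and the word-overlap search is a stand-in for a real index. The point is only that a newly added document becomes retrievable immediately, with no change to any model weights.

```python
import re

class KnowledgeBase:
    """Toy in-memory store; documents can be added at any time."""

    def __init__(self):
        self.documents = []

    def add(self, text):
        """Index a new document; nothing about the language model changes."""
        self.documents.append(text)

    def search(self, query, k=1):
        """Return up to k documents sharing the most words with the query."""
        query_words = set(re.findall(r"[a-z0-9]+", query.lower()))
        scored = []
        for text in self.documents:
            words = set(re.findall(r"[a-z0-9]+", text.lower()))
            overlap = len(query_words & words)
            if overlap:
                scored.append((overlap, text))
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [text for _, text in scored[:k]]

kb = KnowledgeBase()
kb.add("The 2023 pricing plan costs 10 dollars per user.")
question = "What does the 2024 pricing plan cost?"
before = kb.search(question)  # only the 2023 document is available

# New information arrives and is indexed; no retraining step is involved.
kb.add("The 2024 pricing plan costs 12 dollars per user.")
after = kb.search(question)  # the 2024 document now ranks first
```

Here `before` holds the outdated 2023 document and `after` holds the 2024 one, so the same question gets fresher context as soon as the data is uploaded.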

Let's look at some reasons why Retrieval-Augmented Generation (RAG) has emerged as a critical need in the field of Natural Language Processing (NLP):

- Addressing Factuality Issues:

Large Language Models (LLMs) like GPTs, while impressive in their fluency and creativity, often struggle with factual accuracy. Their responses can be biased, factually incorrect, or simply lack grounding in real-world knowledge. RAG overcomes this limitation by injecting external data sources, ensuring responses are informed by actual facts and evidence.

- Enhanced Adaptability and Relevance:

LLMs trained on static datasets become outdated with time, failing to capture the ever-evolving knowledge landscape. RAG, by integrating real-time information retrieval, adapts to dynamic environments and keeps responses relevant to current events and trends. This is crucial for real-world applications like news bots or dialogue systems.

- Improved Transparency and Trust:

Many users find LLM responses opaque and lack confidence in their reliability. RAG, with its source attribution, provides transparency into the data used to generate a response. This transparency fosters trust and allows users to evaluate the credibility of the information presented.

- Mitigating Data Leakage and Bias:

LLMs trained on massive datasets can inadvertently absorb biases and harmful stereotypes present in the training data. RAG, by selecting relevant information from specific sources, helps mitigate data leakage and bias in the generated responses.

- Overcoming Writer’s Block and Semantic Gaps:

RAG can assist writers facing creative roadblocks by suggesting relevant facts, ideas, and examples based on their initial prompt. This can help bridge semantic gaps between the user’s intent and the LLM’s understanding, leading to more consistent and on-topic responses.

- Broader Scope of Applications:

RAG’s ability to ground responses in factual data opens up new avenues for NLP applications. It can be used in scientific question answering, information retrieval systems, personalized education platforms, and many more.
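Source attribution, mentioned under transparency and trust, can be sketched as returning the answer together with the sources shown to the model. The data classes, file paths, and `fake_llm` stand-in below are hypothetical; a real system would call an actual model and might check which passages the answer truly relies on.

```python
from dataclasses import dataclass
from typing import Callable, List

@dataclass
class Passage:
    source: str  # hypothetical identifier, e.g. a file name or URL
    text: str

@dataclass
class AttributedAnswer:
    text: str
    sources: List[str]

def answer_with_sources(
    question: str, passages: List[Passage], llm: Callable[[str], str]
) -> AttributedAnswer:
    """Generate an answer and return it with the sources that were shown to the model."""
    context = "\n".join(f"[{i + 1}] {p.text}" for i, p in enumerate(passages))
    prompt = f"Context:\n{context}\n\nQuestion: {question}\nCite passages by number."
    sources: List[str] = []
    for p in passages:
        if p.source not in sources:  # de-duplicate while preserving order
            sources.append(p.source)
    return AttributedAnswer(text=llm(prompt), sources=sources)

# Stand-in model for illustration; a real LLM client would go here.
def fake_llm(prompt: str) -> str:
    return "Refunds are accepted within 30 days [1]."

passages = [
    Passage("policies/refunds.md", "Refunds are accepted within 30 days of purchase."),
    Passage("policies/refunds.md", "Refunds are issued to the original payment method."),
    Passage("faq/shipping.md", "Orders ship within two business days."),
]
result = answer_with_sources("What is the refund window?", passages, fake_llm)
```

Returning `sources` alongside the text lets an interface show users where an answer came from, which is what allows them to judge its credibility.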

Implementing RAG can be exciting, but it also requires careful planning and consideration

RAG addresses several common problems faced by traditional AI implementations, such as factual errors, lack of context and relevance, black-box behavior, and lack of adaptability to evolving knowledge. Before implementing RAG, organizations should take the following considerations into account.

- External data source: Choose a relevant and reliable source of information that aligns with your use case. This could be a knowledge base, document repository, or even real-time data feeds.

- Integrations: Assess how RAG will integrate with your current workflows, retrieval component, software, API access, and existing AI projects. Seamless integration minimizes disruption and maximizes efficiency.

- Model licensing: The cost of licensing pre-trained language models can be significant. Research cost options and consider potential return on investment.

- Selection of LLM: Choose a suitable LLM based on your needs and available resources. Consider factors like domain-specific knowledge, size, and computational requirements (processing power, storage).

- Fine-tuning: Adapt the chosen model to your specific data to improve its performance. This can involve techniques like task-specific training, alongside prompt engineering.

- Model development and maintenance: The initial investment for developing and fine-tuning a RAG model can be significant. Consider ongoing maintenance costs and potential upgrades.

- Scalability: Consider the anticipated volume and growth of data your RAG system will handle. Choose scalable infrastructure and tools to accommodate future needs without performance bottlenecks.

- Security and privacy: Implement robust security measures to protect user data and ensure the integrity of the retrieved information.

- Prompt engineering: Craft effective prompts that guide the LLM towards the desired information and response format. This can significantly influence the quality and relevance of RAG’s outputs.

- Explainability and transparency: Consider techniques to explain how RAG arrives at its responses. This can build trust and allow users to understand the reasoning behind the generated text.

- Monitoring and evaluation: Continuously monitor RAG’s performance and accuracy. Track key metrics like retrieval success, response relevance, and user feedback. Use this information to refine your data sources, model tuning, and overall system performance.

- Feedback mechanisms: Develop feedback channels to gather user input on RAG’s performance and continuously improve the system based on real-world experience.

- Pilot projects: Start with small pilot projects to test RAG’s effectiveness and address any integration or performance issues before full-scale deployment.
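For the monitoring and evaluation item, retrieval success can be tracked with simple metrics. The sketch below computes hit rate at k and mean reciprocal rank over a small, hypothetical set of labelled queries. The query IDs, document IDs, and relevance labels are invented for illustration, and these two metrics are one common choice rather than the only option.

```python
def hit_rate_at_k(results, relevant, k):
    """Fraction of queries whose top-k retrieved IDs include at least one relevant ID."""
    if not results:
        return 0.0
    hits = 0
    for query_id, retrieved_ids in results.items():
        if set(retrieved_ids[:k]) & relevant[query_id]:
            hits += 1
    return hits / len(results)

def mean_reciprocal_rank(results, relevant):
    """Average of 1/rank of the first relevant result per query (0 if none is retrieved)."""
    if not results:
        return 0.0
    total = 0.0
    for query_id, retrieved_ids in results.items():
        for rank, doc_id in enumerate(retrieved_ids, start=1):
            if doc_id in relevant[query_id]:
                total += 1.0 / rank
                break
    return total / len(results)

# Hypothetical evaluation data: retrieved document IDs per query, in ranked order,
# and the hand-labelled set of relevant IDs for each query.
results = {
    "q1": ["d3", "d1", "d7"],
    "q2": ["d2", "d9", "d4"],
    "q3": ["d5", "d6", "d8"],
    "q4": ["d1", "d2", "d3"],
}
relevant = {
    "q1": {"d1"},
    "q2": {"d2"},
    "q3": {"d0"},
    "q4": {"d3"},
}

if __name__ == "__main__":
    print(hit_rate_at_k(results, relevant, k=1))
    print(hit_rate_at_k(results, relevant, k=3))
    print(mean_reciprocal_rank(results, relevant))
```

Tracking these numbers over time, together with user feedback, gives a concrete signal for when the data sources or the retriever need attention.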

Fluid AI: An innovative RAG-based platform for data-driven AI applications

- Knowledge repository: Fluid AI’s knowledge base functions as a comprehensive library that can assimilate all sorts of information from your organisation, including private and real-time data. Users can upload documents in supported formats including PDF, TXT, CSV, JSON, and DOCX, or simply paste the URL of a web page or even a YouTube link. With a knowledge base built from this data, GPT Copilot can process and leverage these diverse sources, giving users access to the most current and accurate information.

- Retrieval and generation component: Fluid AI is a Retrieval-Augmented Generation (RAG) based platform designed to augment the capabilities of AI applications. The approach brings together three pivotal elements: retrieval, augmentation, and generation. Put simply, it searches for relevant information, then combines that data with its extensive pre-trained knowledge and understanding to generate the most relevant response.

- Integration of private or real-time data: A knowledge base built from your organisation’s private and public information, fed with both structured and unstructured data, makes Fluid AI’s GPT Copilot smarter and more valuable. Fluid AI further customizes the LLM on the organisation’s internal data, making it more reliable and better suited to a specific domain or task.

- Easy-to-use interface: We have made things easy for our customers. You don’t have to go through any complex processes or training, because we have already done the hard work for you. Through a user-friendly interface, you simply upload and update data sources rather than retraining the entire model. This lets the RAG-based Copilot stay up to date with the latest information and give more accurate and informative answers to factual queries by accessing relevant information from knowledge bases and other sources.

- Enhanced relevance and contextual understanding: Fluid AI GPT Copilot remembers past interactions. This helps the Copilot understand context during conversations, making them feel more human, seamless, engaging, and efficient.

- Factual accuracy: As a RAG-based platform, Fluid AI GPT Copilot avoids misleading information by integrating the organisation’s private data and real-world data with its pre-trained knowledge. When you ask a query in natural language, the Copilot retrieves factually accurate information from the knowledge base and combines it with pre-trained knowledge for more human-like conversations. Additionally, Fluid AI incorporates an Anti-Hallucination shield and provides reference snippets to inform users and avoid black-box scenarios.

- Data security: Fluid AI places strong emphasis on data security. With Fluid AI, you don’t have to worry about data privacy and retention: you can choose our secure Private Deployment option or explore the flexibility of public and hybrid hosting. Furthermore, separate instances are built for different teams within an organization, ensuring the right personnel have access to the right data while safeguarding sensitive information.

- Go live instantly: With Fluid AI, organizations can work with any LLM and switch to its latest version with a single update. No complex training or processes are required; implementation is easy, and you can go live in just days.

Wrapping up

Organizations are drowning in data, with valuable insights buried under mounds of reports and customer interactions. Traditional AI struggles to navigate this complexity, often generating generic responses or factually inaccurate content. This leads to lost efficiency, frustrated customers, and missed opportunities.

Organisations can’t simply pick an LLM off the shelf and expect it to work wonders. LLMs struggle to understand the nuances of organizational contexts and often operate like black boxes, making it difficult to understand how they arrive at their outputs. This lack of transparency can hinder trust and limit the adoption of AI solutions within organizations. LLMs can also be computationally expensive to run and require specialized expertise to maintain.

That’s where Fluid AI comes in. We are the first company to bring the power of LLMs to organisations with the additional capabilities enterprises require: an easy-to-use interface and assured security and privacy of data. Organisations no longer need to worry about hiring a new tech or dev workforce, investing months or years in complex training, or struggling to keep up with the latest tech.

Fluid AI’s Copilot brings the power of explainable AI (XAI), shedding light on how RAG arrives at its outputs. Forget months of customization, training, or complex coding. Fluid AI makes RAG readily accessible and easy to use, even without a dedicated AI team, and keeps the technology continuously updated so your Copilot always operates at the cutting edge.